I was looking at Node.js servers, how they work and ways to scale them. I came across a best practices repo and it said: “Be stateless, kill your Servers almost every day”.

I’ve always been skeptical of docker and containers – granted I haven’t used them extensively but I’ve always thought of them as needless abstractions and added complexity that make the developer’s and system administrator’s lives harder.

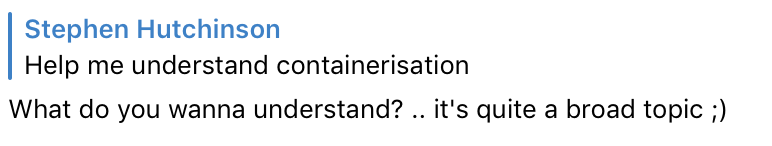

The tides have pushed me towards it though…so I asked a friend to help me understand containerisation.

Help Me Understand Containerisation

Going back to the article I was reading it said: Many successful products treat servers like a phoenix bird – it dies and is reborn periodically without any damage. This allows adding and removing servers dynamically without any side-effects.

Some things to avoid with this phoenix strategy are:

- Saving uploaded files locally on the server

- Storing authenticated sessions in local file or memory

- Storing information in a global object
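To see why the items in this list bite, here is a minimal Python sketch (the article is about Node.js; Python is used only to match the rest of this post). Server and ExternalStore are hypothetical names, and ExternalStore is just a dict standing in for something like Redis or a database.

```python
class ExternalStore:
    """Stand-in for an external session store (e.g. Redis). Hypothetical: just a dict."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


class Server:
    """One app server. Keeps sessions locally (stateful) or in a shared store (stateless)."""

    def __init__(self, shared_store=None):
        self.local_sessions = {}          # dies together with this server
        self.shared_store = shared_store  # outlives this server

    def login(self, session_id, user):
        if self.shared_store is not None:
            self.shared_store.set(session_id, user)
        else:
            self.local_sessions[session_id] = user

    def whoami(self, session_id):
        if self.shared_store is not None:
            return self.shared_store.get(session_id)
        return self.local_sessions.get(session_id)


# Stateful: the session lives in server A's memory, so server B has never heard of it.
a, b = Server(), Server()
a.login("abc", "alice")
stateful_result = b.whoami("abc")

# Stateless: both servers use the same external store, so A can be killed safely.
store = ExternalStore()
a, b = Server(store), Server(store)
a.login("abc", "alice")
del a
stateless_result = b.whoami("abc")

print(stateful_result, stateless_result)
```

In the stateful case the user is logged out as soon as the load balancer sends them to a different (or reborn) server; in the stateless case any server can answer.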

Q: Do you have to store any type of data (e.g. user sessions, cache, uploaded files) within external data stores?

Q: So the container part is only application code running…no persistence?

A: in general i find it best to keep persistence out of the container. There’s things you can do .. but generally it’s not a great idea

Q: Does it / is it supposed to make your life easier?

A: yeah. I don’t do anything without containers these days

Q: Containers and container management are 2 separate topics?

A: yeah. I don’t do anything without containers these days

I think the easiest way to think of a container is like an .exe (that packages the operating system and all requirements). So once a container is built, it can run anywhere that containers run.

Q: Except the db and session persistence is external

A: doesn’t have to be .. but it is another moving part

A: the quickest easiest benefit of containers is to use them for dev. (From a python perspective..) e.g.: I don’t use venv anymore, cause everything is in a container

so .. on dev, I have externalized my db, but you don’t really need to do that

Q: Alright but another argument is the scaling one…so when black friday comes you can simply deploy more containers and have them load balanced. but what is doing the load balancing?

A: yeah .. that’s a little more complicated though .. and you’d be looking at k8s for that. (For load balancing) Usually that’s k8s (swarm in my case) though .. that’s going to depend a lot on your setup. E.g.: I just have kong replicated with spread (so it goes on every machine in the swarm)

Q: If you are not using a cloud provider and want this on a cluster of vm’s – is that hard or easy?

A: Setting up and managing k8s is not easy. If you want to go this way (which is probably the right answer), I would strongly recommend using a managed solution. DO (DigitalOcean) has a nice managed solution – which is still just VMs at the end of the day.

Q: What is spread?

A: Spread is a replication technique for swarm – so it will spread the app onto a container on every node in the swarm. Swarm is much easier to get your head around – but it’s a dying tech. K8s has def won that battle… but I reckon the first thing you should do is get comfortable with containers for dev.

Q: Everything easy and simple has to die

A: In fairness it’s nowhere near as good as k8s.

Q: ah looks like https://www.okd.io/ would be the thing to use in this case

A: yeah .. there’s a bunch of things out there. Okd looks lower level than I would like to go 😉

Q (Comment): Yip, I mean someone else manage the OKD thing…and I can just use it as if it were a managed service

In my head the docker process would involve the following steps:

- Container dev workflow

- Container ci workflow

- Container deployment

- Container scaling

A note on the Container Service Model

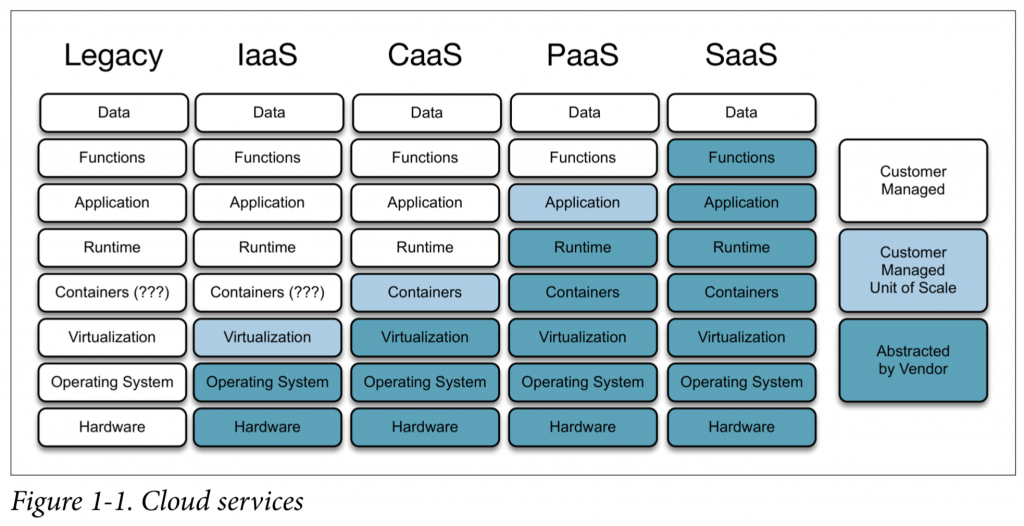

So containers sit somewhere between Infrastructure as a Service (IaaS) and Platform as a Service (PaaS). You are abstracted away from the VM but still manage the programming languages and libraries in the container.

It has given rise to Container as a Service.

The Container Development Process

A: ok .. you can probably actually setup a blank project and we can get a base django setup up and running if you like?

Steps

Make a Directory and add a Dockerfile in it. What is a Dockerfile? A file containing all the commands (on commandline) to build a docker image.

The docker build command builds an image from a Dockerfile and a context.

More info on building your docker file in the Dockerfile reference

The contents of the Dockerfile should be as follows (there are other Dockerfile examples available from caktusgroup, dev.to and testdriven.io):

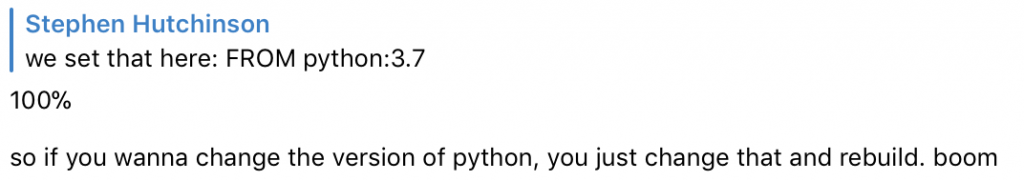

    # pull official base image
    FROM python:3.7
    LABEL maintainer="Name <stephen@example.com>"

    # set environment variables
    ENV PYTHONDONTWRITEBYTECODE 1
    ENV PYTHONUNBUFFERED 1

    RUN mkdir -p /code
    WORKDIR /code

    # Install dependencies
    COPY requirements.txt /code/
    RUN pip install -U pip
    RUN pip install --no-cache-dir -r requirements.txt

    # Copy Project
    COPY . /code/

    EXPOSE 8000

For this to work, we need the requirements.txt to be present. So for now just put in django.

While looking at different Dockerfiles I found these best practices for using docker. It seems like the base image is important and people recommend small ones. There is another article commenting that alpine is not the way to go. For now it is not worth spending time on this, and you don’t need to worry about the different image variants.

PYTHONDONTWRITEBYTECODE: Prevents Python from writing pyc files to disc (equivalent to python -B option)

PYTHONUNBUFFERED: Prevents Python from buffering stdout and stderr (equivalent to python -u option)
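If you want to see these two variables take effect, a small sketch like the following starts a fresh interpreter with both set and reports what it sees. The helper name interpreter_flags is made up for this example; sys.dont_write_bytecode and sys.flags.unbuffered are the standard attributes that reflect the -B and -u options.

```python
import json
import os
import subprocess
import sys

# Code run by the child interpreter: report the two flags as JSON.
CHILD_CODE = (
    "import json, sys; "
    "print(json.dumps({'dont_write_bytecode': sys.dont_write_bytecode, "
    "'unbuffered': bool(sys.flags.unbuffered)}))"
)


def interpreter_flags(extra_env):
    """Start a fresh Python with extra environment variables and return its flags."""
    env = {**os.environ, **extra_env}
    result = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        env=env, capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


flags = interpreter_flags({"PYTHONDONTWRITEBYTECODE": "1", "PYTHONUNBUFFERED": "1"})
print(flags)
```

Unbuffered output matters in a container because docker-compose logs only shows what the process has actually flushed.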

Then create a docker-compose.yaml in the same directory. Docker compose is a tool for defining and running multi-container docker applications: a way to link docker containers together. Compose works in all environments: production, staging, development, testing, as well as CI workflows.

So we have created a docker image for our django application code, but now we need to combine that with a database or just set some other settings. Take a look at the compose file reference.

Add the following:

    version: '3.7'

    services:
      web:
        build: .
        command: python manage.py runserver 0.0.0.0:8000
        volumes:
          - .:/code
        ports:
          - "8000:8000"

This part:

    volumes:
      - .:/code

maps your current directory on your machine (the host) to /code inside the container. You do that in dev – not in production.

Mapping your local volume to a volume in the container means that changes made locally will cause the server to reload on the docker container.
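Both the volumes entry and the ports entry use the same short HOST:CONTAINER form. As a tiny illustration (parse_short_mapping is a hypothetical helper, and it deliberately ignores extras such as a :ro suffix or a host IP), the two halves can be pulled apart like this:

```python
def parse_short_mapping(spec):
    """Split a compose short-syntax 'HOST:CONTAINER' string into its two halves.

    Simplified on purpose: optional extras like ':ro' or a host IP are not handled.
    """
    host, container = spec.split(":", 1)
    return {"host": host, "container": container}


print(parse_short_mapping(".:/code"))    # host directory, then path in the container
print(parse_short_mapping("8000:8000"))  # host port, then port in the container
```

The left side always belongs to your machine and the right side to the container.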

In docker compose remember to list your services in the order you expect them to start.

If you don’t have an existing django project in your directory then run:

    docker-compose run --rm web django-admin startproject {projectname} .

You want to get in the habit of initialising projects in the container.

Next get it up and running with:

    docker-compose up

It then should run in the foreground, the same way a django project runs. You can stop it with ctrl + c.

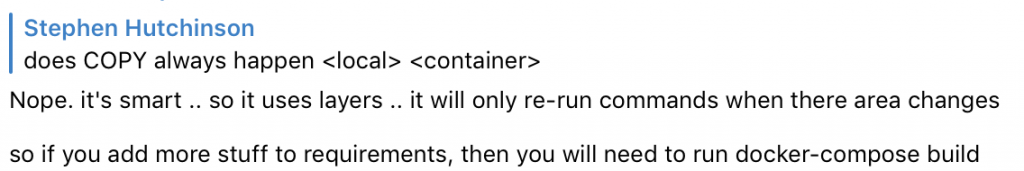

A note on making changes to the Dockerfile or requirements.txt

Docker caches the result of each build step, so the next time you run docker build it will be faster or even skip some steps. However, if you add a new dependency to requirements.txt you must manually rebuild:

    docker-compose build
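As a rough mental model only (this is not Docker's actual cache implementation), you can think of each build step as getting a key made from the previous step's key, the instruction text, and the contents of any files it copies. The first step whose key changes, and every step after it, has to be rebuilt. The sketch below uses a shortened version of the Dockerfile from this post; layer_keys and reused_steps are hypothetical names.

```python
import hashlib

# (instruction, files the instruction copies in): a shortened version of the Dockerfile
STEPS = [
    ("FROM python:3.7", []),
    ("COPY requirements.txt /code/", ["requirements.txt"]),
    ("RUN pip install --no-cache-dir -r requirements.txt", []),
    ("COPY . /code/", ["requirements.txt", "manage.py"]),
]


def layer_keys(steps, files):
    """One key per step: hash of the parent key, the instruction and copied file contents."""
    keys, parent = [], ""
    for instruction, copied in steps:
        digest = hashlib.sha256((parent + instruction).encode())
        for name in copied:
            digest.update(name.encode() + files[name].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def reused_steps(old_keys, new_keys):
    """Count leading steps with an unchanged key; everything after the first miss is rebuilt."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count


before = {"requirements.txt": "django\n", "manage.py": "print('v1')\n"}
code_change = {**before, "manage.py": "print('v2')\n"}
new_dependency = {**before, "requirements.txt": "django\npsycopg2\n"}

base = layer_keys(STEPS, before)
print(reused_steps(base, layer_keys(STEPS, code_change)))     # pip install step is reused
print(reused_steps(base, layer_keys(STEPS, new_dependency)))  # pip install step runs again
```

This is why the Dockerfile copies requirements.txt and installs dependencies before copying the rest of the project: ordinary code changes then leave the slow pip install step untouched.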

It is important to commit your Dockerfile and docker-compose.yaml to git.

Adding Postgres


To add a postgres db, add the following under services in docker-compose.yaml:

      db:
        image: postgres:10.10

Now do a docker-compose up again. This will add the postgres db docker container without persistence.

The command will run the build for you, but in this case it is just pulling an image.

Running in the Background

You can keep the containers running in the background using detached mode:

    docker-compose up -d

Then you can tail the logs of both containers with:

    docker-compose logs -f

If you want to tail the logs of just one container:

    docker-compose logs -f db

So once that’s up, your database will be available under the alias db (the name of the service), which works like a hostname. It is very handy, because you can keep that consistent throughout your environments.

Your containers might look like this:

    $ docker container list
    CONTAINER ID   IMAGE            COMMAND                  CREATED         STATUS         PORTS                    NAMES
    3db1218ca0ae   postgres:10.10   "docker-entrypoint.s…"   8 minutes ago   Up 4 seconds   5432/tcp                 vm-api_db_1
    14e26e524ded   vm-api_web       "python manage.py ru…"   19 hours ago    Up 4 seconds   0.0.0.0:8000->8000/tcp   vm-api_web_1

If you look at the ports, the database is not publishing a port to the host (5432/tcp is only reachable from the other containers) because docker-compose manages the networking between the containers.

An important concept to understand is: everything inside your compose file is isolated, and you have to explicitly expose ports. You’ll get an error if you try to run a service on a host port that is already in use.

Connecting to the DB

To connect to the db, update your settings file with:

    import os

    DATABASES = {
        'default': {
            # 'django.db.backends.postgresql_psycopg2' is the old name for this backend
            'ENGINE': 'django.db.backends.postgresql',
            'NAME': os.environ.get('DATABASE_NAME', 'postgres'),
            'USER': os.environ.get('DATABASE_USER', 'postgres'),
            'HOST': os.environ.get('DATABASE_HOST', 'db'),
            'PORT': 5432,
        },
    }

You will also need to add psycopg2 to requirements.txt.
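The os.environ.get(..., default) pattern is what lets the same settings file work inside and outside docker-compose. The sketch below wraps it in a hypothetical database_settings helper so the fallback is easy to see. The hostname db.internal.example is made up, and the ENGINE string uses the current backend name django.db.backends.postgresql.

```python
import os


def database_settings(environ=os.environ):
    """Build a Django-style DATABASES dict, falling back to the compose service defaults."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": environ.get("DATABASE_NAME", "postgres"),
            "USER": environ.get("DATABASE_USER", "postgres"),
            "HOST": environ.get("DATABASE_HOST", "db"),
            "PORT": 5432,
        }
    }


# Inside docker-compose nothing needs to be set: the host defaults to the "db" service.
print(database_settings({})["default"]["HOST"])

# Elsewhere (hypothetical hostname) only the environment changes, not the code.
print(database_settings({"DATABASE_HOST": "db.internal.example"})["default"]["HOST"])
```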

You can create a separate settings file and then set the environment variables. I think it feels better to put environment variables in docker compose:

      web:
        environment:
          - DJANGO_SETTINGS_MODULE=my_project.settings.docker

If you wanted to do it in the image:

    ENV DJANGO_SETTINGS_MODULE=my_project.settings.deploy

You can run docker-compose build again.

Remember: adding a new package to requirements.txt needs a new build.

So use docker-compose up for changes to docker-compose.yaml, and docker-compose build for changes to the Dockerfile.

docker-compose restart only restarts the running containers; it does not pick up changes to your compose file.

Test that Environment Vars are Working

    $ web python manage.py shell
    Python 3.7.4 (default, Sep 11 2019, 08:25:59)
    [GCC 8.3.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    (InteractiveConsole)
    >>> import os
    >>> os.environ.get('DJANGO_SETTINGS_MODULE')
    'window.settings.docker_local'

Here web is the alias for docker-compose run --rm web (see Running Commands in your dev container).

Adding Persistence to the DB

Adding persistence to a db is done by adding a volume:

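      db:
        image: postgres:10.10
        volumes:
          - data-volume:/var/lib/postgresql/data

The official postgres image keeps its data in /var/lib/postgresql/data, so that is the path the volume has to be mounted on.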

Then add a top level volume declaration:

An entry under the top-level volumes key can be empty, in which case it uses the default driver configured by the Engine (in most cases, this is the local driver).

    volumes:
      data-volume:

That will do this:

    Creating volume "vm-api_data-volume" with default driver

Nuclear Option

Sometimes you just need to blow things up and start again:

    docker-compose stop
    docker-compose rm -f -v
    docker-compose up -d

Ordering

It is advised to list your services in the order you expect them to start in docker-compose.

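You can also add depends_on: db, but it isn’t completely reliable: it only controls the order in which the containers are started and does not wait for the database to be ready to accept connections.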
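One common workaround is to have the web container wait until the database port accepts connections before doing anything else. Below is a minimal sketch of that idea; wait_for_port is a hypothetical helper, and an open TCP port is only an approximation of "Postgres is ready". The demo at the bottom uses a throwaway local listener rather than a real database so it can run anywhere.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False if timeout passes first."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Demo against a throwaway local listener instead of a real database.
listener = socket.socket()
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen(1)
demo_port = listener.getsockname()[1]
print(wait_for_port("127.0.0.1", demo_port, timeout=2.0))
listener.close()
```

Inside the web container you would call something like wait_for_port("db", 5432) before running migrations, since db is the service name from the compose file.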

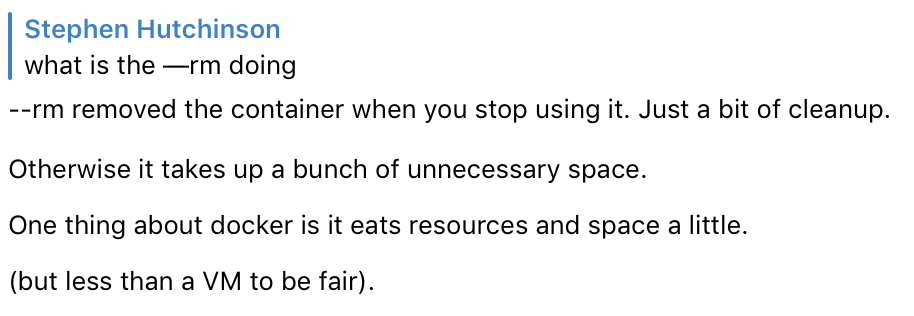

Running Commands in your dev container

There are commands like migrating and creating a superuser that need to be run in your container.

You can do that with:

    docker-compose run --rm web ./manage.py migrate

A great alias to add to your (control node) host is:

    alias web='docker-compose run --rm web'

Refresh your shell:

    exec $SHELL

Then you can do: web ./manage.py createsuperuser

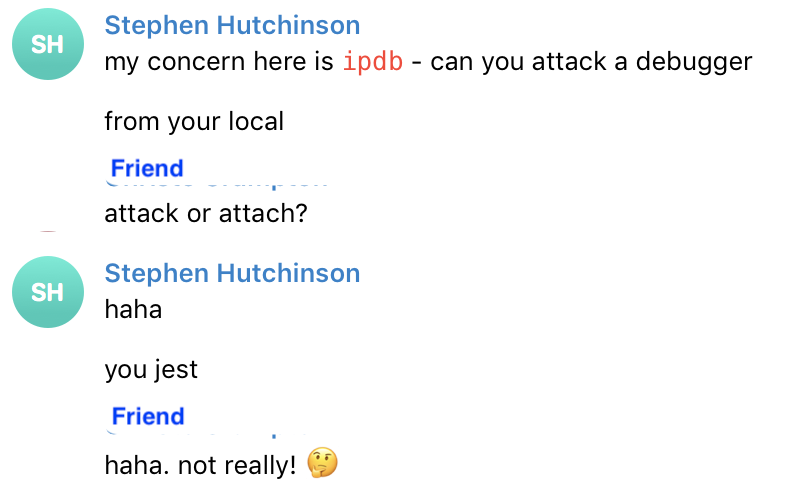

Debugging in your Development Docker Container

Well this has gone on long enough…catch the containerisation of a CI workflow and the deployment of containers in Part 2 of Help me Understand Containerisation