I have a gitlab version control and CI instance running.

I also have a Harbor registry running.

Now I want to ensure that I can build and push images from gitlab to harbor using gitlab’s continuous integration and continuous deployment (CI/CD).

First Steps

- Create a git repo on gitlab with your Dockerfile

- Create a user and project on harbor to store your image

- Take note of the credentials needed to login to the registry

I am basing this tutorial on a similar tutorial that uses gitlab’s container registry.

.gitlab-ci.yml

The core of controlling and managing the tasks that gitlab CI runs is the .gitlab-ci.yml file that lives inside your repo.

Because the file lives in the repo, you can version control your CI process.

You can read the full reference guide for the .gitlab-ci.yml file.

Create this file.

Specify the image and stages of the CI process

image: docker:19.0.2

variables:
  DOCKER_DRIVER: overlay2
  DOCKER_TLS_CERTDIR: ""
  DOCKER_HOST: tcp://localhost:2375

stages:
  - build
  - push

services:
  - docker:dind

Here we set the image of the container we will use to run the actual process. In this case we are running docker inside docker.

I have read that this is bad, but it is my first attempt so I just want it to work, then I will optimise.

We set the stages, which are labels that will be used later to link jobs to a stage.

The other variables, DOCKER_DRIVER, DOCKER_TLS_CERTDIR and DOCKER_HOST, are needed for this to work.

Before and After

Much like a test suite’s setUp and tearDown phases, the CI process has before_script and after_script tasks that are executed before and after each job respectively.

In our case that involves logging in and out of our registry.

For this phase the username and password of your harbor user are required (or your cli_secret if you are using OIDC to connect).

Importantly, these should not be written here in plaintext but rather set up as custom environment variables in gitlab.

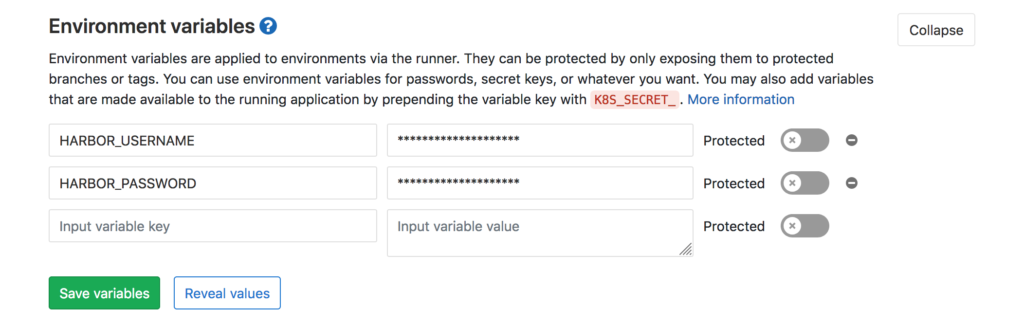

In gitlab:

- Go to Settings > CI/CD

- Expand the Environment variables section

- Enter the variable names and values

- Set them as protected

I used: HARBOR_REGISTRY, HARBOR_USERNAME, HARBOR_REGISTRY_IMAGE and HARBOR_PASSWORD
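A missing or misspelled variable tends to show up only as a confusing login failure, so it can help to check for them at the start of a job. The following is a minimal sketch and not part of the original pipeline: the helper name require_vars is hypothetical, and the variable names are the ones listed above.

```shell
#!/bin/sh
# require_vars is a hypothetical helper: it returns non-zero if any of the
# named environment variables is unset or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX sh has no ${!name}).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing CI variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example call, as it might appear as the first line of before_script:
# require_vars HARBOR_REGISTRY HARBOR_USERNAME HARBOR_REGISTRY_IMAGE HARBOR_PASSWORD
```

Note that protected variables are only passed to pipelines on protected branches and tags, so a check like this would also fail on an unprotected branch.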

So now we can set in the yaml:

before_script:
  - echo -n $HARBOR_PASSWORD | docker login -u $HARBOR_USERNAME --password-stdin $HARBOR_REGISTRY
  - docker version
  - docker info

after_script:
  - docker logout $HARBOR_REGISTRY

Important: if you are using a robot account (xxx$robotaccount), you must write the username explicitly in the file and not store it as an environment variable. The $ in the name is treated as the start of a variable, so the value is not saved correctly.
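The effect of the $ can be reproduced in a plain shell. This sketch only illustrates shell quoting, using the placeholder account name from above; how gitlab itself stores and expands the value is not shown here.

```shell
#!/bin/sh
# "robotaccount" stands in for the part of the name after the $.
# It is empty here, as it would be inside a CI job.
robotaccount=""

# Double quotes: the shell expands $robotaccount (to nothing), mangling the name.
expanded="xxx$robotaccount"

# Single quotes: the $ is kept literally, so the name survives intact.
literal='xxx$robotaccount'

printf 'double-quoted: %s\n' "$expanded"
printf 'single-quoted: %s\n' "$literal"
```

This is why the full file further down passes the robot account name to docker login in single quotes.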

Setting the tasks in the build stages

The build stage:

Build:
  stage: build
  script:
    - docker pull $HARBOR_REGISTRY_IMAGE:latest || true
    - >
      docker build
      --pull
      --cache-from $HARBOR_REGISTRY_IMAGE:latest
      --tag $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA .
    - docker push $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA

This pulls the last image, which is used as the cache when building the new image. The image is then pushed to the registry with the commit SHA-1 as the tag.

Tag Management

According to the tutorial it is good practice to keep your git tags in sync with your docker tags.

The build job takes the git commit hash and uses it to tag the image. The advantage is that it is automatic and prevents duplication. The disadvantage is that the image tags do not use semantic versioning, so you have to know the hash to deploy the version you want. The job below runs only when a git tag is pushed: it pulls the image built for that commit and tags it again with the git tag name.
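To make the two naming schemes concrete, here is a small sketch of the image references involved. All three values are hypothetical stand-ins for what gitlab CI would inject into a job.

```shell
#!/bin/sh
# Hypothetical values; in a real job gitlab sets the CI_* variables and the
# HARBOR_* variable comes from the project settings.
HARBOR_REGISTRY_IMAGE="harbor.example.com/myproject/myimage"
CI_COMMIT_SHA="eb9823f70123456789abcdef0123456789abcdef"
CI_COMMIT_REF_NAME="v1.2.0"

# Reference pushed by the build job on every commit.
sha_ref="$HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA"

# Extra reference added only when the pipeline runs for a git tag.
tag_ref="$HARBOR_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME"

echo "$sha_ref"
echo "$tag_ref"
```

Both references point at the same image, so the tagged name is just a friendlier alias for the hash.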

Push_When_tag:
  stage: push
  only:
    - tags
  script:
    - docker pull $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA
    - docker tag $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker push $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME

The Full .gitlab-ci.yml

.gitlab-ci.yml

image: docker:18-git

variables:
  DOCKER_DRIVER: overlay2
  DOCKER_TLS_CERTDIR: ""
  DOCKER_HOST: tcp://localhost:2375

stages:
  - build
  - push

services:
  - docker:18-dind

before_script:
  - echo $HARBOR_USERNAME
  - echo -n $HARBOR_PASSWORD | docker login -u 'robot$gitlab_portal' --password-stdin $HARBOR_REGISTRY
  - docker version
  - docker info

after_script:
  - docker logout $HARBOR_REGISTRY

Build:
  stage: build
  script:
    - docker pull $HARBOR_REGISTRY_IMAGE:latest || true
    - >
      docker build
      --pull
      --cache-from $HARBOR_REGISTRY_IMAGE:latest
      --tag $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA .
    - docker push $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA

Push_When_tag:
  stage: push
  only:
    - tags
  script:
    - docker pull $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA
    - docker tag $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_SHA $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker push $HARBOR_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME

Let’s Try it out

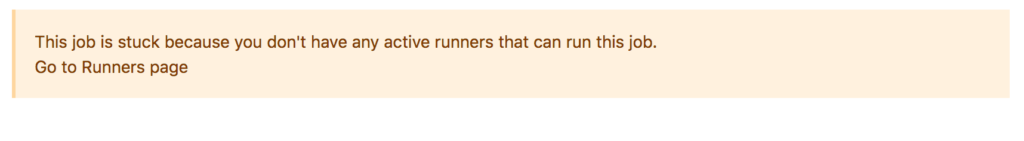

After committing the .gitlab-ci.yml file and checking the CI jobs in gitlab, I found the job in a pending / stuck state.

The reason was that I had not set up a runner. That is probably beyond the scope of what you came here for, so you can skip this next section.

Setting Up a Runner

Gitlab runners run the tasks in .gitlab-ci.yml.

There are 3 types:

- Shared (for all projects)

- Group (for all projects in a group)

- Specific (for specific projects)

On gitlab.com you would use the shared runners; on your own instance you can set up a shared runner.

Shared runners are available to all projects, and I like that because it simplifies things.

But where should gitlab runners live? The answer is wherever you want.

The problem is that there are so many options that there is no obvious way to just start and get a runner going:

- Should I use k8s? – docs are long and horrendous

- Should I use the vm gitlab is on?

- Should I use a vm gitlab is not on?

I suppose if you read the full gitlab runners docs you will have a better idea, but I don’t have a week.

So I am going to try the k8s way.

You will need 2 values, which you can get from: <my-gitlab-instance>/admin/runners

- gitlabUrl

- runnerRegistrationToken

Follow the steps in the k8s runner setup, which result in you creating a values.yml file.

There are many additional settings to change, but I just want it to work now.

You must set privileged: true in the values file of the helm chart if you are doing docker in docker, as we are.

values.yml:

gitlabUrl: https:///
runnerRegistrationToken: ""
imagePullPolicy: IfNotPresent
terminationGracePeriodSeconds: 3600
concurrent: 5
checkInterval: 60
rbac:
  create: false
  clusterWideAccess: false
metrics:
  enabled: true
runners:
  image: ubuntu:16.04
  privileged: true
  pollTimeout: 180
  outputLimit: 4096
  cache: {}
  builds: {}
  services: {}
  helpers: {}
securityContext:
  fsGroup: 65533
  runAsUser: 100
resources: {}
affinity: {}
nodeSelector: {}
tolerations: []
hostAliases: []
podAnnotations: {}
podLabels: {}

Searching for the Helm Chart

Add the gitlab helm chart repo and search for the version you want:

helm repo add gitlab https://charts.gitlab.io
helm search repo -l gitlab/gitlab-runner

The gitlab-runner version must be in sync with the gitlab server version: https://docs.gitlab.com/runner/#compatibility-with-gitlab-versions
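A rough way to express "in sync" is that the major and minor parts of the two versions match. The sketch below assumes plain MAJOR.MINOR.PATCH strings; same_minor is a hypothetical helper and the server version in the example is made up.

```shell
#!/bin/sh
# same_minor is a hypothetical helper: it succeeds when two MAJOR.MINOR.PATCH
# version strings share the same MAJOR.MINOR prefix.
same_minor() {
  # ${1%.*} strips the shortest trailing ".something", i.e. the patch part.
  [ "${1%.*}" = "${2%.*}" ]
}

# Example: a server on 11.7.5 (made-up value) and a runner app version of 11.7.0.
if same_minor "11.7.5" "11.7.0"; then
  echo "runner and server are on the same minor release"
fi
```

The runner version to compare is the APP VERSION column in the helm search output, not the chart version.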

In my case (11.7.x):

gitlab/gitlab-runner 0.1.45 11.7.0 GitLab Runner

Create the k8s namespace:

kubectl create namespace gitlab-runner

Install the helm chart:

helm install --namespace gitlab-runner gitlab-runner -f values.yml gitlab/gitlab-runner --version 0.1.45

Unfortunately…I got an error:

Error: unable to build kubernetes objects from release manifest: unable to recognize "": no matches for kind "Deployment" in version "extensions/v1beta1"

The problem is that k8s 1.16 stopped serving some old API versions, but the helm chart at that version still specifies the old one for its Deployment. So now I have to get a local copy of the runner chart and fix the deployment.
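Before patching anything, it can be worth confirming which API group the cluster actually serves Deployments from. The following is a sketch under the assumption that kubectl is pointed at the target cluster; kind_served_in_group is a hypothetical helper name.

```shell
#!/bin/sh
# kind_served_in_group is a hypothetical helper: it succeeds if the cluster
# lists the given plural resource (e.g. "deployments") in the given API group
# (e.g. "apps").
# Usage: kind_served_in_group <resource> <group>
kind_served_in_group() {
  # With -o name, resources are printed as "<resource>.<group>".
  kubectl api-resources --api-group="$2" -o name | grep -qxF "$1.$2"
}

# Example usage against the current kubectl context:
# kind_served_in_group deployments apps && echo "use apps/v1"
# kind_served_in_group deployments extensions || echo "extensions no longer serves Deployment"
```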

Fixing the Helm Chart

Get the helm chart:

helm fetch --untar gitlab/gitlab-runner --version 0.1.45

Then find the deployment and change:

apiVersion: extensions/v1beta1

to:

apiVersion: apps/v1

Add the selector:

spec:
  selector:
    matchLabels:
      app: {{ include "gitlab-runner.fullname" . }}

Change the values.yaml in the fetched chart and finally install from the local changes:

helm install --namespace gitlab-runner gitlab-runner-1 .

NAME: gitlab-runner-1

LAST DEPLOYED: Fri Jun 26 10:24:51 2020

NAMESPACE: gitlab-runner

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Your GitLab Runner should now be registered against the GitLab instance reachable at: "https://xxx"

BOOM!!!

The runner should now be showing as a shared runner at: https://<my-gitlab-instance>/admin/runners

Enable the Shared Runner on your Repo

Run the job again…go to CI/CD -> Pipelines -> Run Pipeline

Permission error on K8s instance

Running with gitlab-runner 11.7.0 (8bb608ff)

on gitlab-runner-1-gitlab-runner-7487b4cf77-lz9cr 11AFa4Fw

Using Kubernetes namespace: gitlab-runner

Using Kubernetes executor with image docker:19 ...

ERROR: Job failed (system failure): secrets is forbidden: User "system:serviceaccount:gitlab-runner:default" cannot create resource "secrets" in API group "" in the namespace "gitlab-runner"

I think this means the service account the runner uses (default in the gitlab-runner namespace) does not have permission to create secrets.
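That reading can be checked directly by asking the API server, rather than waiting for the next failed job. A minimal sketch, assuming your own kubectl user is allowed to impersonate service accounts; sa_can is a hypothetical helper name.

```shell
#!/bin/sh
# sa_can is a hypothetical helper: it asks the API server whether a service
# account may perform a verb on a resource in a namespace.
# Usage: sa_can <namespace> <serviceaccount> <verb> <resource>
sa_can() {
  # `kubectl auth can-i` prints "yes" or "no"; "|| true" keeps a "no" answer
  # from aborting scripts that run with `set -e`.
  answer=$(kubectl auth can-i "$3" "$4" \
    --namespace "$1" \
    --as "system:serviceaccount:$1:$2" 2>/dev/null || true)
  [ "$answer" = "yes" ]
}

# Example for the failing case in the log above:
# sa_can gitlab-runner default create secrets || echo "not allowed"
```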

Get all service accounts:

kubectl get sa -A

View the specific service account:

kubectl get sa default -o yaml -n gitlab-runner

It is important to read the kubernetes documentation on managing and configuring service accounts. I found this stackoverflow question that gives us the quick fix.

So let us edit the service account and give it permission (I don’t want to give it cluster admin):

kubectl edit sa default -n gitlab-runner

Well, it rejected the rule key, and the documentation is either too much or too sparse.

I just took the easy / insecure option:

$ kubectl create clusterrolebinding default --clusterrole=cluster-admin --group=system:serviceaccounts --namespace=gitlab
clusterrolebinding.rbac.authorization.k8s.io/default created

That worked, but the binding is cluster-wide and targets the system:serviceaccounts group, so every service account in the cluster now has cluster admin rights, not just the runner’s. So be wary.
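A narrower alternative is a Role scoped to the runner namespace, bound only to the runner’s service account. This is a sketch I have not run against this setup: grant_runner_role and the role name gitlab-runner-jobs are hypothetical, and the resource and verb lists are assumptions that may need adjusting to whatever the job log reports as forbidden.

```shell
#!/bin/sh
# grant_runner_role is a hypothetical helper. It creates a Role limited to one
# namespace and binds it to a single service account, instead of handing
# cluster-admin to every service account.
# Usage: grant_runner_role <namespace> <serviceaccount>
grant_runner_role() {
  # The resource and verb lists below are assumptions, not a verified minimum.
  kubectl create role gitlab-runner-jobs \
    --namespace "$1" \
    --verb=get,list,watch,create,update,patch,delete \
    --resource=pods,pods/exec,pods/attach,secrets,configmaps &&
  kubectl create rolebinding gitlab-runner-jobs \
    --namespace "$1" \
    --role=gitlab-runner-jobs \
    --serviceaccount="$1:$2"
}

# Example: grant_runner_role gitlab-runner default
```

Setting rbac.create to true in the chart values is another route, since the chart can then create its own service account and role.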

Results

Cloning repository...

Checking out eb9823f7 as master...

Skipping Git submodules setup

$ echo -n $HARBOR_PASSWORD | docker login -u $HARBOR_USERNAME --password-stdin $HARBOR_REGISTRY

Login Succeeded

$ docker version

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

Client: Docker Engine - Community

Version: 19.03.12

API version: 1.40

Go version: go1.13.10

Git commit: 48a66213fe

Built: Mon Jun 22 15:42:53 2020

OS/Arch: linux/amd64

Experimental: false

Running after script...

$ docker logout $HARBOR_REGISTRY

Removing login credentials for xxx

ERROR: Job failed: command terminated with exit code 1

Partially successful…according to this issue it is a common problem with dind.

So we need to ensure that the runner is set as privileged: true and then set the TCP port of the docker host.
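Even with both settings in place, the dind service can take a moment to start listening, so a short retry loop before the first docker command may help. This is my own addition rather than something from the linked issue; wait_for_docker is a hypothetical helper, and the DOCKER_HOST value is the one used in the variables section above.

```shell
#!/bin/sh
# wait_for_docker is a hypothetical helper: it retries `docker info` until the
# daemon answers or the attempts run out.
# Usage: wait_for_docker [attempts] [delay_seconds]
wait_for_docker() {
  attempts="${1:-30}"
  delay="${2:-1}"
  while [ "$attempts" -gt 0 ]; do
    if docker info >/dev/null 2>&1; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  echo "docker daemon did not become ready" >&2
  return 1
}

# Example, as it might appear at the top of before_script:
# export DOCKER_HOST=tcp://localhost:2375
# wait_for_docker 30 1
```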

To apply the privileged setting to an existing release (not required if you started from the top):

helm upgrade --namespace gitlab-runner gitlab-runner-1 .

Conclusion

Done. It is working:

Job succeeded